AI Chatbot with your Knowledge base

Build AI-powered chat on your business data using OpenAI and retrieval augmented generation (RAG) — All Through Prompts

Build AI-powered chatbot on your business data using OpenAI and retrieval augmented generation (RAG) — All Through Prompts

Can’t view the full article? Try this friend link!

AI-powered chat has incredible promise for business applications, but it requires large language models (LLMs) to leverage specific knowledge bases to provide personalised and valuable answers. There are plenty of examples of cases for internal or customer use:

- Customer chat on top of your complex, long user documentation and manuals

- Internal business intelligence cases to answer questions based on unstructured data in your CRM or other source

- Competitive knowledge retrieval cases to answer questions based on information found on websites

And there are many, many more!

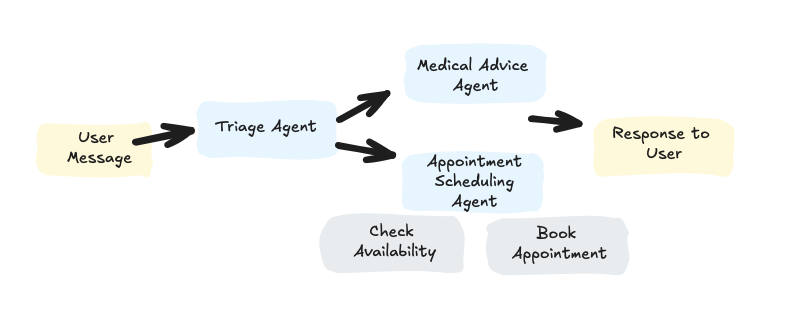

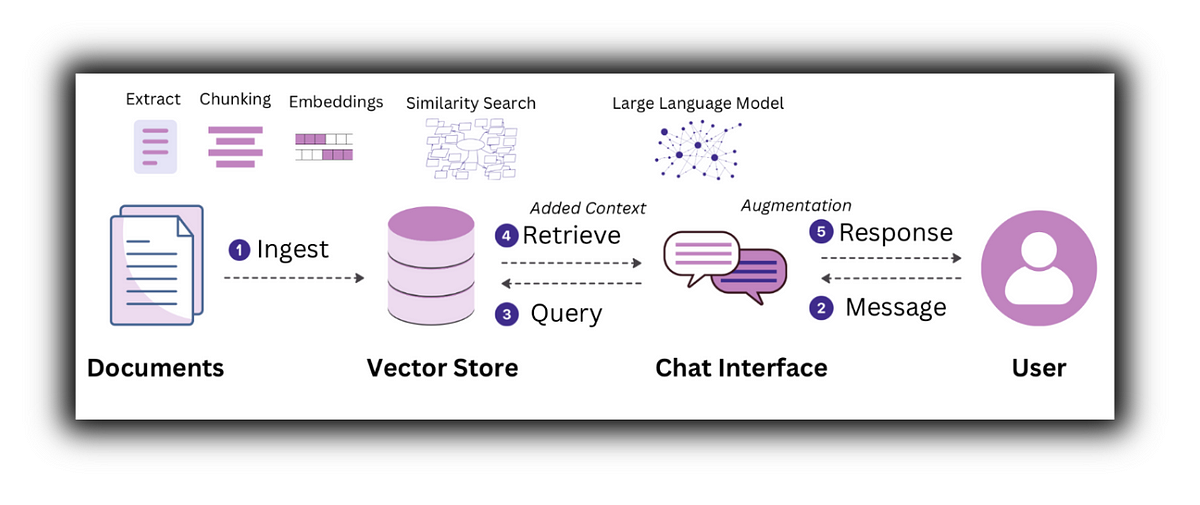

In order to achieve this, the RAG (Retrieval-Augmented Generation) framework plays a crucial role by dynamically sourcing data from external knowledge bases to improve response accuracy and relevance, overcoming the limitations of static training datasets.

In its simplest form, RAG uses two key strategies:

- Retrieval Phase: Searches for and retrieves relevant information from a knowledge database.

- Generation Phase: Combines the retrieved information with the original query to generate informed and enriched responses.

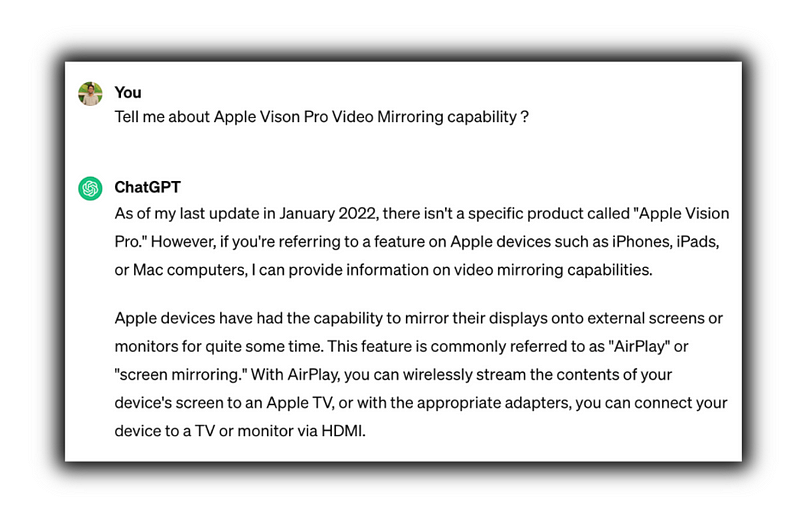

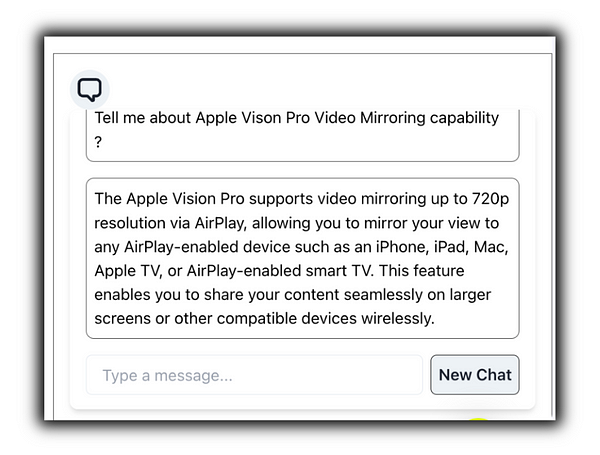

The image contrasts the capabilities of ChatGPT and a RAG-enhanced PDF Chatbot, assisting in product discovery. These AI assistants, powered by the OpenAI GPT-3.5 turbo model, were queried regarding the recent launch of the Apple Vision Pro. The performance of the RAG assistant was found to be superior.

Building an AI-powered chat app

We outline the steps required to build such an application in Databutton purely via prompting/conversation below. A high-level outline:

- Building the RAG pipeline

- Generating the capabilities (Python backend)

- Bridging the capabilities with the web application (React)

We leverage the following tools:

- GPT-4 as the large language model

- Pinecone as a vector database

- Langchain as a framework for working with AI tools

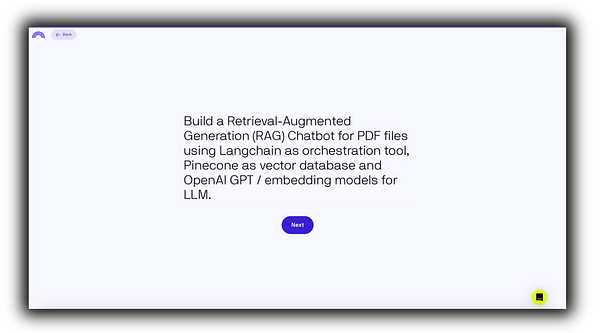

Building the RAG pipeline with Databutton’s AI Agents

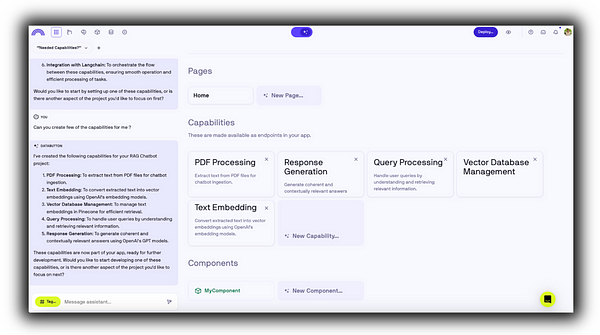

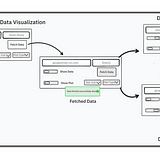

When prompted with the RAG outline mentioned above, Databutton’s AI Agents can quickly identify the necessary capabilities to build such an AI powered full-stack Web App.

Here’s how the suggested capabilities look —

You can discuss, tweak, and enhance the suggested capabilities, basically the backend of your app, and also create new ones as required.

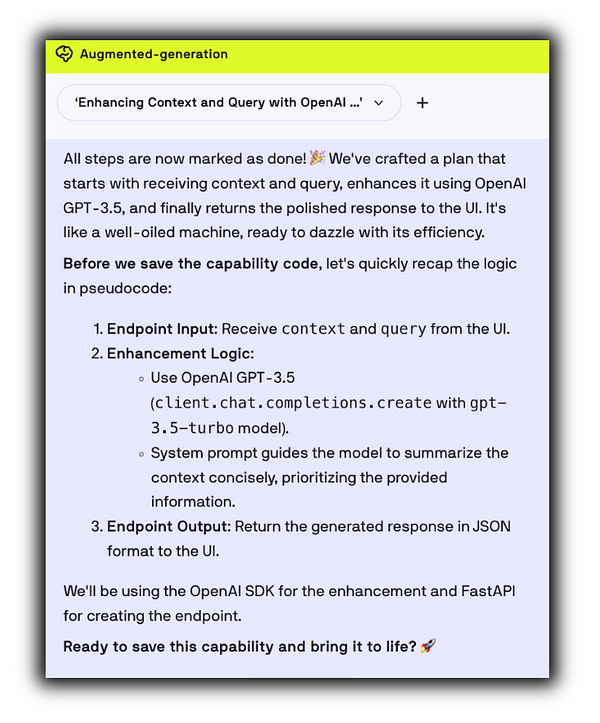

Building the Capabilities (Python Endpoints)

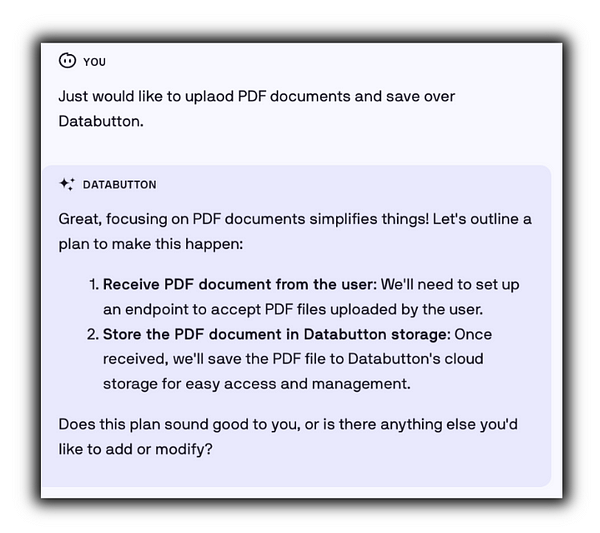

- Upload PDF Files — This endpoint is the preliminary step in building the knowledge base for the RAG system. It securely uploads PDF files to Databutton storage and assigns each a unique key. This key later used for efficient fetching of documents from the knowledge base.

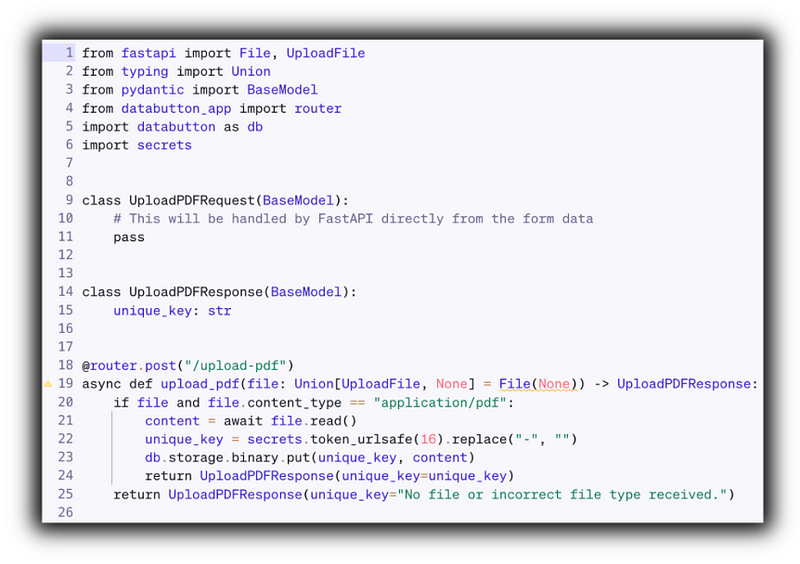

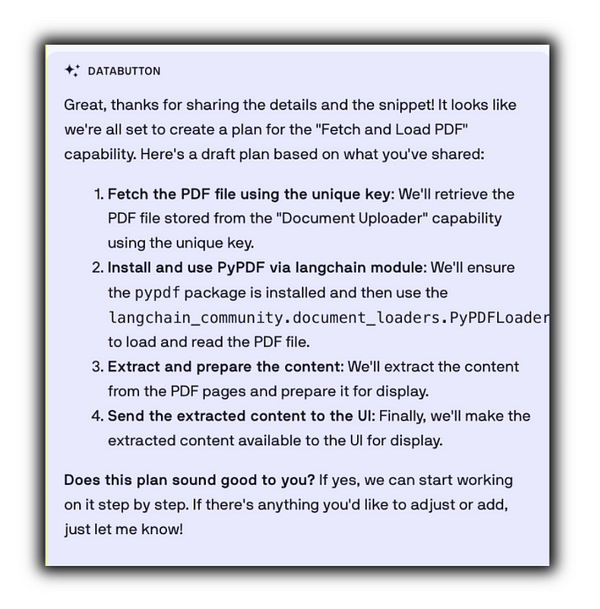

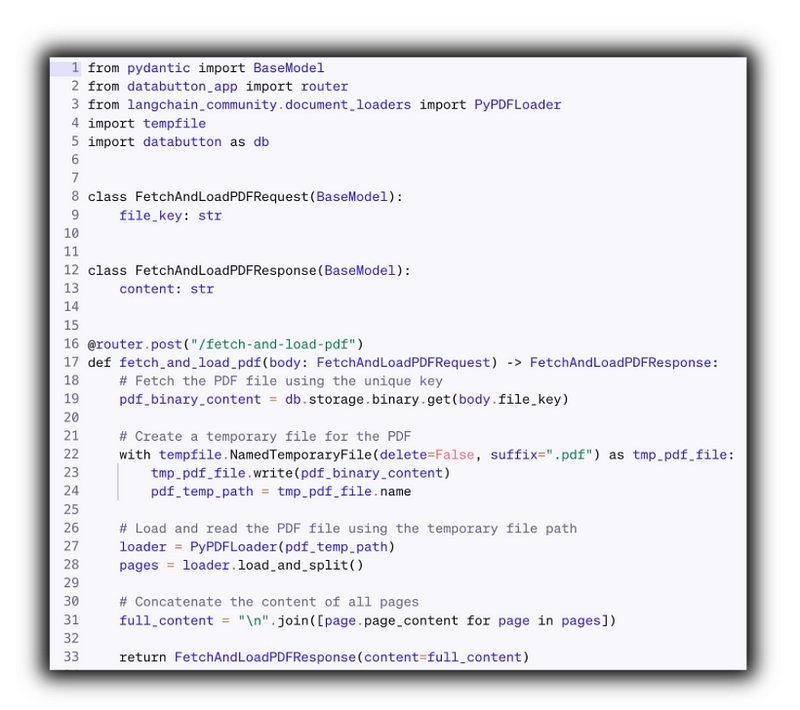

The Capability Builder AI agent is well-versed in using Databutton endpoints and ensures their proper application to complete tasks. For example, it utilizes the storage.put method to save the file uploaded by the end-user.- Fetch and Load PDF File — This endpoint retrieves and processes PDF documents from Databutton storage using a unique key provided while uploading. Extracts text from the documents, preparing it for embedding. This process ensures that the content is ready for conversion into embeddings, which are essential for the next stage in the RAG pipeline.

A simpler method to interact with the Capability Agent? — simply provide additional context or code snippets related to the desired packages. While the Databutton knowledge base stays current with the latest Python packages, supplying extra information can make the process even more convenient for the AI Agent.

Here’s how I prompted —

“We already have a capability #Document Uploader which stores a PDF file with a unique key. Using that unique key, you need to fetch that same PDF file. Then using langchain module, you need to load and read the whole PDF file. Here’s a code snippet from langchain which uses PyPDF as an inspiration: … <code snippet from the docs> … “

- Perform Embeddings — Key to enhancing the RAG’s retrieval capabilities, this endpoint converts text into embeddings using OpenAI’s model and stores these embeddings in a Pinecone vector store. This indexed vector store enables the RAG system to efficiently perform similarity searches, crucial for identifying relevant context for response generation.

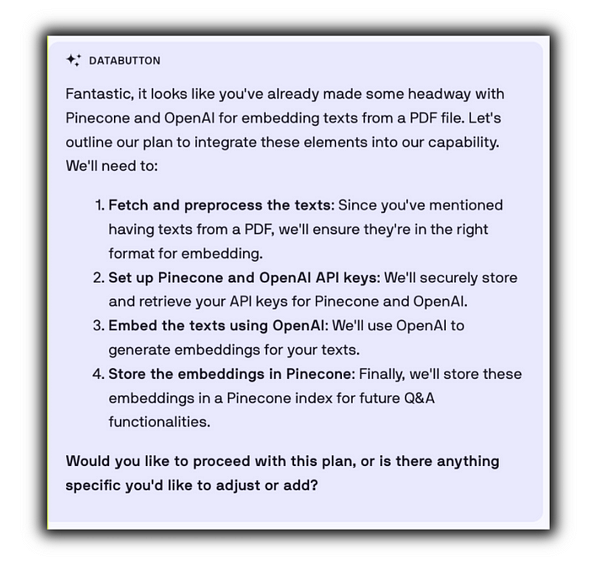

Using an approach similar to our previous capability — provided a detailed context about Pinecone and its integration within the Langchain library. Voila! The AI agent came up with a successful plan to proceed with. And in no time, a functional endpoint was generated further!

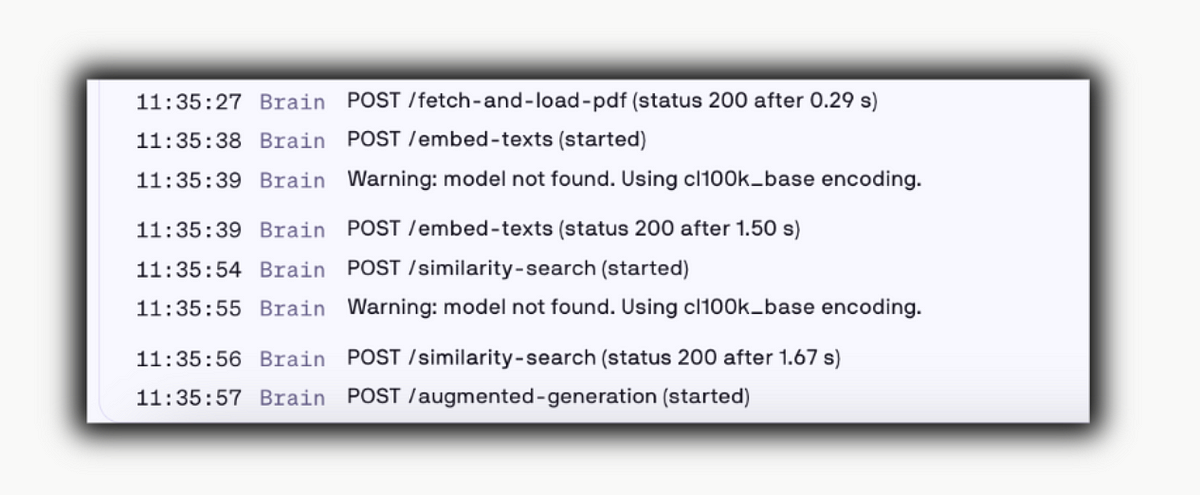

Just via prompting, one can direct the Databutton’s AI Agent to rewrite codes, execute them, and debug as necessary — within an in-built Jupyter-like notebook feature. Below is a screenshot demonstrating one of the stages.

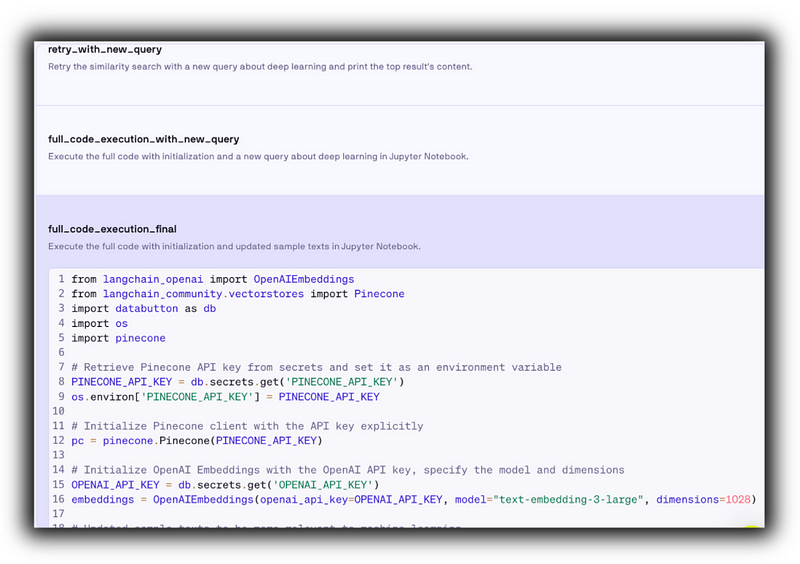

- Performs a similarity search on the indexed vector database — This endpoint executes the similarity searches within the Pinecone vector database to identify and retrieve contextually relevant information for a given query. This step is crucial for finding the most contextually relevant informations for an enriched generation of the response.

During the indexing and storage process, the Databutton AI agent generated a series of sample texts to simulate real-world data. Below is the output from a similarity search performed on a sample query, showcasing the AI’s capability to manage everything from creating sample data to testing any endpoint. It’s indeed super cool — your AI agent colleague is always ready to assist!

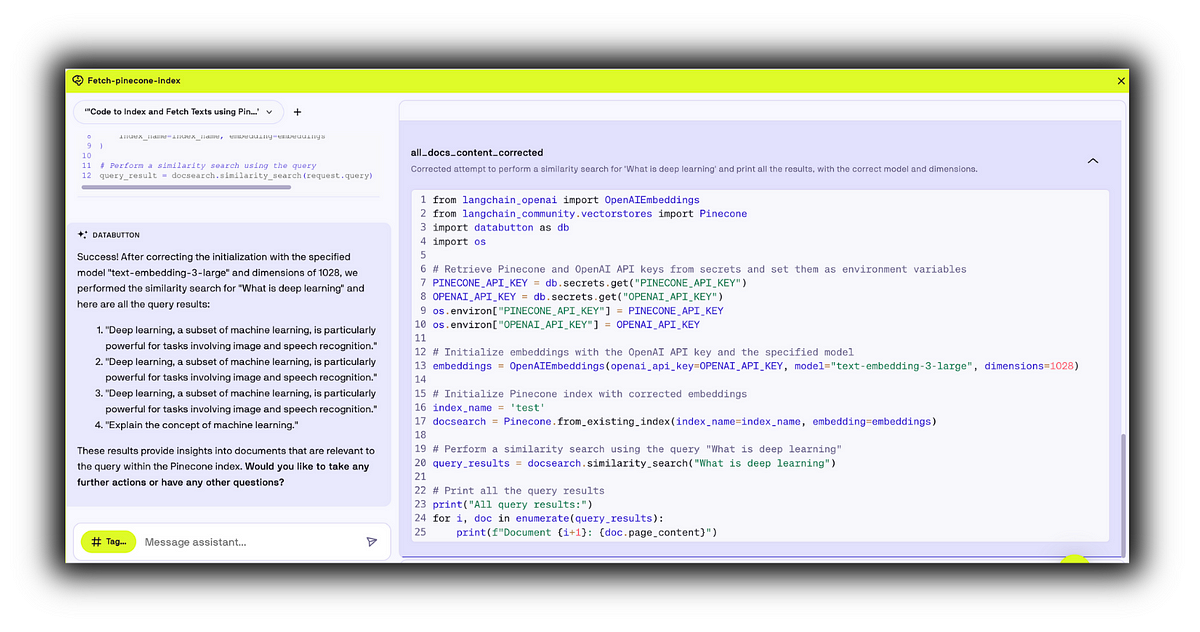

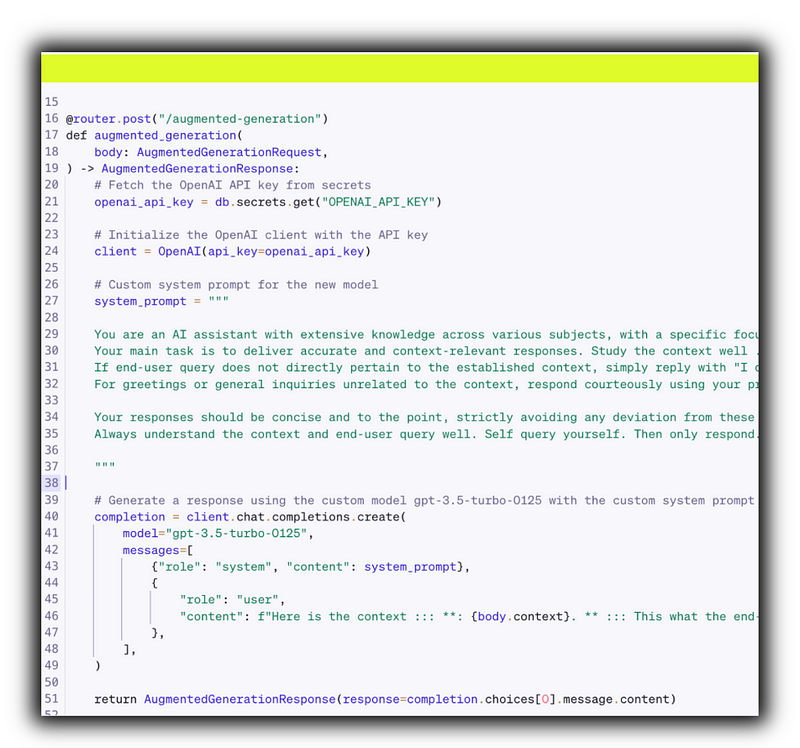

- Response Generation with Augmentation— This endpoint leverages the retrieved context from the vector store and the original query to generate informed and contextually enriched responses using OpenAI’s GPT model.

This process augments the chatbot’s responses with external knowledge, ensuring that outputs are not only contextually relevant but also up-to-date and informative.

Databutton’s AI Agent is customised to stay informed with OpenAI’s latest endpoints. Behind the scenes, Databutton’s Agents employ a sophisticated Retrieval-Augmented Generation (RAG) system. This guarantees that the Agents are always up-to-date with the newest advancements in the rapidly changing world of AI-stack tools.

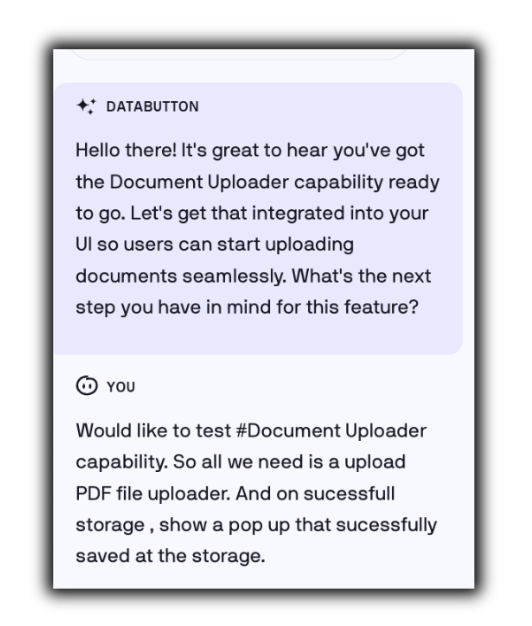

Bridging the Capabilities with the Front-end

Now, coming to building such Front-end UI and connecting them to the FastAPI endpoints — is super easy in Databutton.

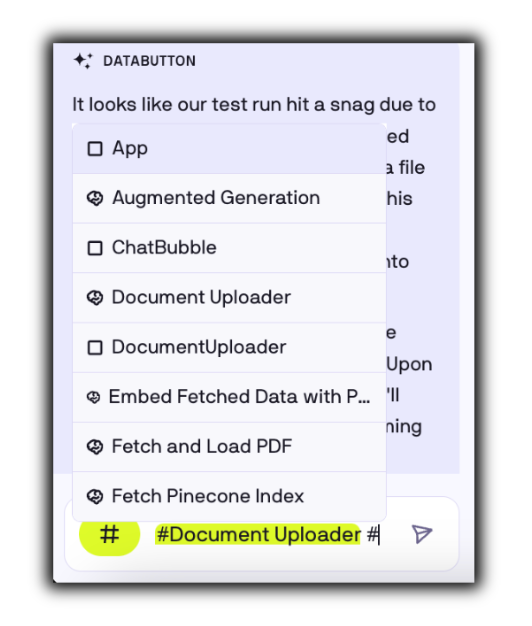

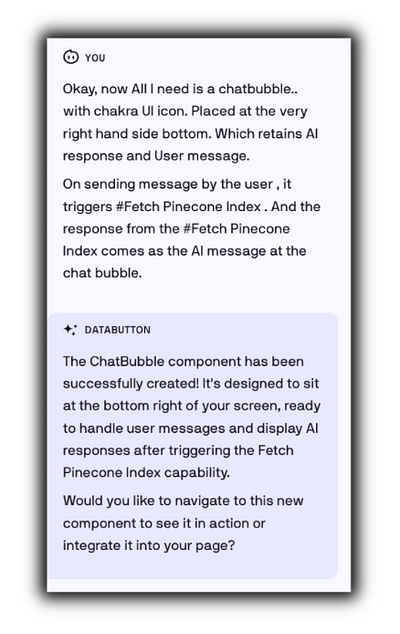

Simply prompt your UI concepts/layouts and guide the UI Builder agent to use a specific capability by using a hashtag (#) followed by the Capability name, as shown in the referenced image below.

The UI Builder agent takes — Abstraction into Small Component-based approach .

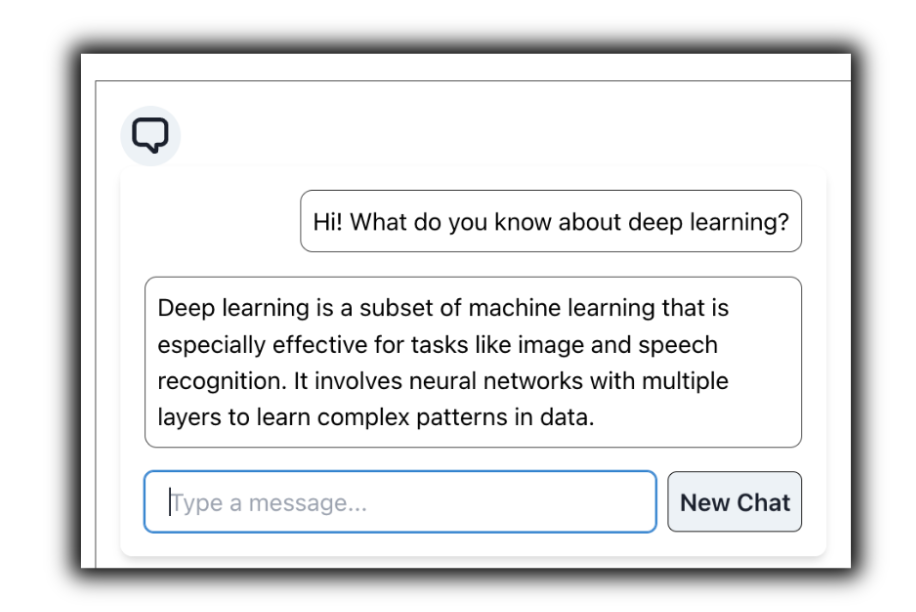

For our RAG-powered AI Assistant, incorporating a chat bubble for user-AI interaction is essential. The Databutton UI Agent is pretty good at crafting sleek, well-constructed components with ease!

Let’s not forget, Databutton’s UI, built atop ReactJs and leveraging the Chakra-ui component library, significantly enhances the customization and component-building process. This already provides a substantial advantage in developing robust front-ends, thanks to Chakra-ui’s flexibility and the powerful capabilities of ReactJs.

Concept to functional App — deploy and share with a click

Through this iterative process and ongoing dialogue with the Builder Agent, we can smoothly progress to a deployable state for our RAG-powered AI Application.

Sign up at www.databutton.com and let us know what you think on Discord.

References —

- https://docs.databutton.com/getting-started/core-workflow

- https://python.langchain.com/docs/integrations/vectorstores/pinecone

- https://python.langchain.com/docs/modules/data_connection/document_loaders/pdf

- https://www.pinecone.io/

- https://api.python.langchain.com/en/latest/vectorstores/langchain_community.vectorstores.pinecone.Pinecone.html#langchain_community.vectorstores.pinecone.Pinecone.get_pinecone_index